TL;DR: Once again, Carmack was right. Go Mobile, Young Man, Go Mobile.

Holy Jeebus, that’s right! I have a design and prototyping blog too! No, no, just kidding, I didn’t forget about it. In fact, I have so much stub content written and just waiting to be finished and published that you’d think...well, think whatever one thinks about that kind of situation. More book reviews are in the works, plus some mindset stuff as well; I just need to get out from under May (Mai? Insert joke about hot Asian women and reverse cowgirl?) and content will start flowing again.

Mmm...Mai. More great work at http://pop-lee.deviantart.com/

On that note, I’ve been in Android development land for the last couple of weeks, working on...oh, of course it’s something I can’t talk about yet (you should all know that by now), but if I pull it off, it’ll be pretty cool. I can say that it may end up being a stage demo for Google I/O, and while my specific part of it won’t be that cool in and of itself, I think the total package will be pretty sweet, and I really hope it’s something people pick up on. Like most of the tech I'm working with, I don't know how consumer-friendly it is, but I think in the right hands it'll be inspirational and could definitely pave the way for consumer uptake. Hopefully I haven't just violated my work NDA...

Anyway, after two or three weeks of learning Android basics, Android Studio, and OpenGL ES, I’ve finally got a build running on a device: my trusty Tango tablet. Well, actually, more like my poor, neglected Tango tablet that I hadn’t done anything with yet, but I digress... Yeah, it was a bit of a long process, but that’s how I learn new languages and platforms (more on that later). It’s a little thing, but I'm really excited by the simple prospect of having an OpenGL ES app fully deployed to a platform, and Android, or rather "mobile" development in general, has certainly become more of an interest for me over the last few weeks. If you’ve been thinking about developing for Android, and ESPECIALLY about developing OpenGL ES based stuff...DO IT!! The world needs more resources and more folks actively banging on this stuff for things other than games, so Join Me In My Quest!!

The Fellowship of the...AVD?

Seriously, it's a bit of a wild west. There aren't a ton of resources available for learning OpenGL ES on Android, and god help you if you're trying to learn the Android build chain AND OpenGL ES at the same time. The resources that do exist are, in my opinion at least, a bit spotty on effectiveness, but again, once Mai is done with me, I'll try to put together my findings. Hopefully they'll be useful to someone, and I encourage more folks to do the same. I'm sorta tempted to do an ES 3 tutorial series similar to what I did with my Cinder::gl Tutorials, but ES 3.0 is close enough to desktop OpenGL that you could just learn OpenGL 3.2/3.3 and then brush up on the build chain for Android (or whatever your platform of choice is). Hmm...maybe a "Learning OpenGL ES with Android Studio" series? ...and I haven't even touched on the Tango/Tegra stuff, which is a whole kraken unto itself...

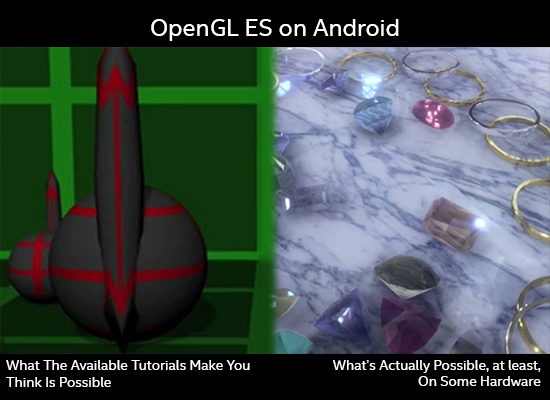

Why my sudden love, or at least technolust, for mobile development? Well, I know some folks are probably inclined to agree with the image and are thinking “Android? That’s just for silly little apps, and none of the hardware platforms have the power/perf metrics to do anything REALLY cool.” Well...I disagree, or at least I disagree based on what I see when I look three to five years down the road. Between the likes of the Tegra X1 and beyond, ES 3, Vulkan, and whatever Tango evolves into, all these things add up to a platform just waiting to be taken forward into the future. If you have a modern Android device with a decent mobile GPU, you should check out the Yebis 2 OpenGL ES 3.0 Demo on Google Play, which is from whence the shiny part of the image cometh (cameth?). Sure, the kicker is having an OpenGL ES 3.0 capable mGPU, and I know, I know, a demo is not a full app and doesn't have the same power/perf profile (don't you be tellin' my nu'n 'bout demos, BOY!), etc., etc., but if there's one thing we should all know about hardware, it's that it doesn't stagnate, and I don't see that trend slowing down.

True Story, Bro.

So I’m excited now. Mobile has come to mean something completely different to me over the last couple of weeks: mobile isn’t just phones and tablets, it’s Oculus, it’s Vive, it’s HoloLens, it’s most certainly things like Gear VR and Wearality, but it’s also the intersection of all those things with current mobile devices and paradigms. Gotta say, at first I thought Carmack was a little crazy, but no, I think I get where he’s coming from, and I’m on board. My big issue with VR and HMDs up till now has been the idea of people just sitting alone in dark rooms with displays wrapped around their heads. I think that's definitely an acceptable use scenario, but I didn't want it to be the only one, and given the new perspective I think we can all take on "mobile", I don't think it will be.

Alright, back to it. Nothing earth-shattering or groundbreaking here for sure, just some thoughts. I just got back from //build/, and I'm definitely excited about Windows 10 and the Universal Windows Platform too, so I'm not just a late-to-the-party Android sycophant. Well, based on what I've said, I may actually be early to some party, so I think I'm alright. The mobile future certainly promises...interesting times. Here's to it, and hopefully my next post will have some actually useful stuff (curse you, Mai! But keep doing what you're doing...). I'll leave you with a bit of shiny so this post isn't totally useless: a short vid of my aforementioned first build. Gotta love that early 90s demoscene aesthetic! Cheers!