Gah, OK OK, I promised myself I'd stop talking smack about former large software companies I may or may not have worked for. I dunno, it's weird: if you're like me, you always feel like once you leave a company on bad terms, you're forever professionally competing with them as long as you're in the same industry. Not to put too fine a point on anything, or anything, hehe...

Aaaanyway, I feel like I'm at a good enough spot with KinectCam to drop some knowledge on you, if you're interested in playing with this stuff yourself. I've gotta say I might have written Kinect off a bit early; I'm having a good time playing around with it and its ilk. Granted, none of the games I've ever played on Kinect do the sort of stuff I've been doing, and I sort of wonder what kind of crazy game you could come up with using the depth stream... OK, sure, I'm just reaching there, but probably not; I bet someone (Jeff Minter) could come up with some insane depth stream game. Actually, I'm thinking of some sort of pattern-recognition app that I can feed my CD collection into, or maybe just stuff the audio array into, you know, anything to avoid this sort of thing (Hmm, this would be funnier if you didn't have to squint for the text):

Alright, well, mindless self indulgence aside, let's get to this MS Kinect SDK wrapper. It's odd that this will probably be the only post I make that applies to this particular wrapper, which brings me to another bug up my nose: I wish MS would just release an official Unity wrapper/plugin/whatever. Truth be told, though, it probably wouldn't be terribly hard to compile my own DLL; it's more of a time issue, making sure I expose and marshal everything properly, not to mention keeping up with the SDK itself. But SDK specifics aside, all the maths herein should be pretty easy to re-apply, since it's just a few simple space conversion tricks.

So, to recap from my previous post, make sure you install the following softwarez:

- Unity

- Kinect Beta SDK - Now that I'm out of demo land, I'm going to make a concerted effort to figure out release vs. beta; there are some interesting jumping-off points

- Kinect Wrapper

- Kinect Interface

- DirectX June 2010 SDK

- DirectX End-User Runtime - I feel now like this might be optional, but install it anyway if you're interested in doing independent Kinect/DX development

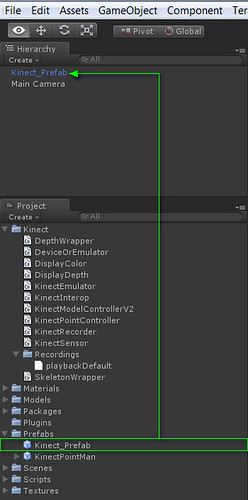

Alright, getting stuff working in this environment is pretty simple. Import the KinectWrapperPackage and you'll have all the scripts and objects you need to get started. I recommend starting with a blank scene and building up from there, although you could pop open the KinectExample scene and make blobs dance around wildly.

Once you have a new scene (taxing task that that is), all that's required to make it Kinect-aware is to drag a Kinect_Prefab from the Project into the scene, like so:

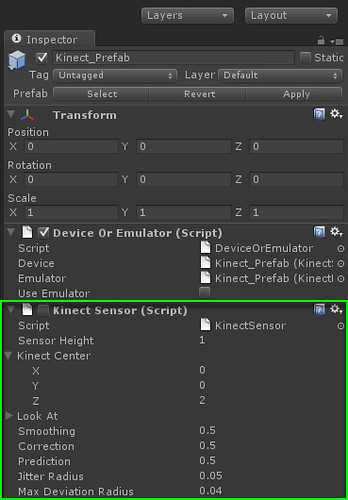

This scene doesn't actually do anything yet, obviously, but you can use the Kinect_Prefab to set up your camera. If you play the scene, you may notice the camera moving around a bit and focusing in odd places (hey buddy, my eyes are UP HERE), which you can fix by setting some values in the KinectSensor component attached to the Kinect_Prefab:

I've been using the Skeletal Viewer included in the SDK to make sure I'm getting good coverage; once you find some numbers that work, you can always go into the KinectSensor code and set your own defaults if you want.

Alrighty then, time to get the camera moving. I went the hack route and used Unity's built-in MouseLook script as a jumping-off point, since it only required a few minor changes to the camera transform code. The part that requires a bit of work is turning Kinect skeletal numbers into Unity-friendly numbers, and when I say "bit", I mean it; it's actually not that hard. The first thing I did was for my own sanity: set up a little GUI indicator that tells me whether I'm tracking or not. Polling the camera for the depth image in this wrapper is a bit annoying, because you have to restart Unity every time you stop running. Might be a fun project to figure that out and re-implement depth and color display as a GUI texture; something to keep in mind for my next project. Anyway, verifying tracking is pretty simple. I put this all in a script called FromBonePosition:

Pretty straightforward, we're just pulling some functionality from the included SkeletonWrapper class, which...well, wraps Skeleton functionality. Truth be told, I feel like this class is a bit of an extra, but whatever. Anyway, breaking down the code:

- Really, all pollSkeleton() does is call the native NuiSkeletonGetNextFrame(); I'll leave what that probably does as an exercise for the reader.

- trackedPlayers[] is interesting. The details of how we get there aren't important at this stage; the important point is that we're grabbing an enum that tells us what we're tracking. KinectWrapper initializes the values in trackedPlayers[] to -1, which indicates that we haven't even acquired a skeleton. The possible enum values are NUI_SKELETON_NOT_TRACKED, NUI_SKELETON_POSITION_ONLY, and NUI_SKELETON_TRACKED. So if either of the two players has been acquired, we can say we're tracking.

- So once we've tracked, we spit out a string to the UI that tells us what's up.
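Here's a minimal sketch of that check, written in Python rather than the wrapper's C# so the logic stands on its own. It assumes, following the description above, that trackedPlayers holds one slot per player and that -1 means no skeleton has been acquired yet. is_tracking() and tracking_status() are hypothetical names and not part of the wrapper.

```python
# Sketch of the tracking check described above (hypothetical names, not the
# wrapper's API). Assumes tracked_players mirrors SkeletonWrapper.trackedPlayers:
# one entry per player slot, initialized to -1 ("no skeleton acquired").
NOT_ACQUIRED = -1


def is_tracking(tracked_players):
    """True if at least one player slot has acquired a skeleton."""
    return any(p != NOT_ACQUIRED for p in tracked_players)


def tracking_status(tracked_players):
    """Build the string we'd show in the GUI indicator."""
    if is_tracking(tracked_players):
        return "Tracking"
    return "Not tracking"


# Two player slots, since we might be tracking two players.
print(tracking_status([-1, -1]))
print(tracking_status([-1, 2]))
```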

Joints are pretty easy to poll, as the SkeletonWrapper class provides a few different arrays that contain joint information and the SDK provides us a convenient enum for specifying which joint to query for data. So let's add some code to our class:

Simple, efficient (like the body itself): we add a variable to hold the right hand's position, then pull a value from the bonePos[] array on the SkeletonWrapper. You'll notice bonePos[] is a 2D array: the first index is which skeleton to poll (we might be tracking two players), and the second is, pretty obviously, the joint we want the position of. You can see the full enum definition in the KinectInterop class if you ever want to poll a different joint, or update a bunch of joints, or a whole skeleton, or make tea, or something like that. We output that number to the GUI too, a) for verification purposes and b) so we can set some other numbers later.
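Here's a Python sketch of how that [player][joint] lookup works. The joint numbers are an assumption, based on my reading of the Kinect SDK's NUI_SKELETON_POSITION_INDEX ordering (20 joints, head at 3, right hand at 11), so check the enum in KinectInterop before relying on them. joint_position() is a hypothetical helper, and the positions are made-up sample values.

```python
# Sketch of indexing a [player][joint] position table like bonePos[,].
# Joint indices are an ASSUMPTION based on the Kinect SDK's
# NUI_SKELETON_POSITION_INDEX ordering; check KinectInterop for the real enum.
from enum import IntEnum
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


class Joint(IntEnum):
    HIP_CENTER = 0
    SPINE = 1
    SHOULDER_CENTER = 2
    HEAD = 3
    HAND_LEFT = 7
    HAND_RIGHT = 11


JOINT_COUNT = 20  # assumed: the SDK skeleton has 20 joints


def joint_position(bone_pos: List[List[Vec3]], player: int, joint: Joint) -> Vec3:
    """First index picks the skeleton (player), second picks the joint."""
    return bone_pos[player][joint]


# Two players with every joint at the origin, then place player 0's right hand.
bone_pos = [[(0.0, 0.0, 0.0)] * JOINT_COUNT for _ in range(2)]
bone_pos[0][Joint.HAND_RIGHT] = (0.2, 1.1, 2.0)
right_hand = joint_position(bone_pos, 0, Joint.HAND_RIGHT)
print(right_hand)
```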

By now we pretty much know all the Kinect stuff we need to get our camera moving, so let's get our camera look on. Here's our member list (with comments!):

- Find where the hand's x falls (as a percent) between the minimum and maximum x (OldX) that we get from the Kinect

- Repeat for y

- Rescale that into (-1, 1) so it mimics Unity's mouse input values

- Rotate the camera

- Profit, or at least impress your friends (or your mom)
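The steps above can be sketched roughly like this, with Python standing in for the Unity script. The OldX/OldY defaults are the values quoted in this post. The sensitivities (15) and the pitch clamp (±60 degrees) are assumptions that mirror MouseLook's defaults as I remember them. I've also added clamping so an out-of-range hand can never push the axis value outside (-1, 1). to_axis() and KinectLook are hypothetical names.

```python
# Sketch: map a Kinect hand position to MouseLook-style axis values, then
# accumulate camera rotation the way MouseLook does with Input.GetAxis().
# Ranges, sensitivities and clamps are ASSUMPTIONS; tune them for your space.


def to_axis(value, lo, hi):
    """Percent of the way from lo to hi, rescaled to [-1, 1] and clamped."""
    percent = (value - lo) / (hi - lo)      # 0.0 at lo, 1.0 at hi
    percent = min(max(percent, 0.0), 1.0)   # keep out-of-range hands in bounds
    return percent * 2.0 - 1.0              # 0..1 -> -1..1


class KinectLook:
    def __init__(self, old_x=(-0.3, 0.7), old_y=(1.3, 2.4),
                 sensitivity_x=15.0, sensitivity_y=15.0,
                 min_pitch=-60.0, max_pitch=60.0):
        self.old_x, self.old_y = old_x, old_y
        self.sensitivity_x, self.sensitivity_y = sensitivity_x, sensitivity_y
        self.min_pitch, self.max_pitch = min_pitch, max_pitch
        self.yaw = 0.0    # rotation about the up axis, in degrees
        self.pitch = 0.0  # rotation about the side axis, in degrees

    def update(self, hand_x, hand_y):
        """One frame: hand position in, (pitch, yaw) in degrees out."""
        axis_x = to_axis(hand_x, *self.old_x)  # stands in for GetAxis("Mouse X")
        axis_y = to_axis(hand_y, *self.old_y)  # stands in for GetAxis("Mouse Y")
        self.yaw += axis_x * self.sensitivity_x
        self.pitch += axis_y * self.sensitivity_y
        self.pitch = min(max(self.pitch, self.min_pitch), self.max_pitch)
        # In Unity this would roughly become localEulerAngles = (-pitch, yaw, 0).
        return self.pitch, self.yaw


look = KinectLook()
print(look.update(0.7, 2.4))  # hand at the top-right of the range
```

Because the hand's absolute position is fed in where MouseLook expects a per-frame mouse delta, holding your hand off-center keeps the camera turning. That's the trade-off of reusing MouseLook's accumulate-and-clamp logic almost verbatim.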

So yeah, we're basically parroting MouseLook's rotation code and replacing Input.GetAxis() with the data we poll from the Kinect. This SHOULD work, as it's almost verbatim the code I was using, minus things like aim assist and project-specific passing. Play around with the OldX and OldY values: the Kinect SDK says that values for X can lie within (-2.2, 2.2) and Y within (-1.6, 1.6), but that's going to vary a bit depending on where you're hanging out. For example, the values we ended up using were OldX = (-0.3, 0.7) and OldY = (1.3, 2.4). Drop a texture onto the GUI and set its rectangle based on the hand position; that's a pretty simple way to get some good debug. It's not too tricky to cast your value into screen space, so try it out!
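For the debug texture, here's one possible sketch of casting the hand position into screen space: reuse the same percent-of-range idea, then scale to pixels. It assumes GUI coordinates with the origin at the top-left (as in Unity's OnGUI), which is why y gets flipped. hand_to_rect() is a hypothetical name, and the 32-pixel marker size is an arbitrary choice.

```python
# Sketch: convert a Kinect hand position to a GUI rectangle for a debug marker.
# ASSUMES GUI coordinates with (0, 0) at the top-left, so y is flipped.


def hand_to_rect(hand_x, hand_y, old_x, old_y, screen_w, screen_h, size=32):
    """Return (left, top, width, height) in pixels, centered on the hand."""
    def percent(value, lo, hi):
        # Fraction of the way from lo to hi, clamped to [0, 1].
        return min(max((value - lo) / (hi - lo), 0.0), 1.0)

    px = percent(hand_x, *old_x) * screen_w
    py = (1.0 - percent(hand_y, *old_y)) * screen_h  # higher hand -> higher on screen
    return (px - size / 2, py - size / 2, size, size)


print(hand_to_rect(0.7, 2.4, (-0.3, 0.7), (1.3, 2.4), 1280, 720))
```

The rectangle is centered on the hand, so at the edges of the range the marker hangs partly off-screen. Drop the `- size / 2` offsets if you'd rather pin its top-left corner to the hand instead.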

I think that's all I've got for now; I'm going to try porting this to OpenNI/PrimeSense just for fun this weekend too.

This is what I wanted to be doing when I left my last job. I haven't had a chance to dust off the Kinect in a while; maybe this will provoke me into doing a little tinkering again.

I just picked up a used one still in the box for $75, and I'm ready to start making things move! Thanks for the links. Is Brekel any good from what you have seen? I'm starting with that for now.

Depends on what you're trying to do. The Brekel stuff, I believe, is based on OpenNI/NITE, so that might be a good place to start if you're looking to do your own development. We've been using the Windows Kinects recently, and I've gotta say they're definitely worth the investment. Getting features for the 360 Kinect is probably going to get a little trickier, but the Windows SDK keeps getting updated. The newest release has things like Near Field and Upper Body tracking, so it's probably worth looking into if you're serious about NUI development...

Hey. I am also using this wrapper, even though there have been no recent updates to it. The thing is, I have this project whose hiccups I have to track down; it was developed under the Zigfu bindings. I did some research, and I can see that the skeleton tracking this framework offers is not sufficient, and I really want solid, stable skeleton tracking as the basis of my system. The project I'm working on right now is related to gesture recognition and monitoring the user's exercise. I also believe the Microsoft Kinect SDK is the direction I will turn to. Anyway, first, are you aware of any other good MS Kinect SDK Unity wrapper? And second, can you suggest any recent Kinect project related to gesture recognition?

ReplyDeletethanks a lot for your help. and your post was exactly what i was looking for.

Microsoft has their own Kinect Unity wrapper, but they haven't released it yet, and I'm not sure what the schedule is. They also just announced some sort of hand and gesture recognition for an upcoming SDK release; here's a bit of info on that:

http://www.theverge.com/2013/3/6/4069598/kinect-for-windows-hand-detection-hands-on

Hope that helps!

Again, thanks. Every bit of info is important to me!

No problem, I do a lot of work with Kinect and other depth cameras/APIs, so if you need help with code or anything related, please don't hesitate to ask. Good luck! Your project sounds really interesting.

Thanks a lot, Seth. Well, taking the script above as an example, which polls the Kinect for a bone position by recreating a mouse driver, how can I use it to show whether the right hand is above the head, and when it is under a certain angle?
