Recently, we lucky saps in the Perceptual Computing Lab have had the chance to build some prototypes for different external groups, and I've been fortunate enough to work with one of my favorite groups, [REDACTED]! Needless to say, I'm super excited. One of the first projects I'm working on uses depth data and object/user segmentation data to interact with virtual/digital content, much like the ever-popular Kinect/Box2D experiments you've probably seen floating around...

Depth buffer, OpenCV, cinder::Triangulator, and Box2D: seemed pretty straightforward. I mean, let's be honest, that's creative coding 101, right? That's what I thought, but as usual, the devil's in the details, and after some (not terribly extensive) searching and a little more coding I had...eh, well...nothing. My code looked correct, but no meshes were drawn that day, and even a cursory inspection of my TriMesh2ds turned up nary a vertex. Here's what I tried originally (this is sketch code, so yeah, the pattern is a little sloppy):

```cpp
// cv contours are in mContours, mMeshes is vector<TriMesh2d>
for(auto vit=mContours.begin();vit!=mContours.end();++vit)
{
    Shape2d cShape;
    const vector<cv::Point>& contour = *vit;
    auto pit=contour.begin();
    // start the outline at the first contour point
    cShape.moveTo(pit->x,pit->y); ++pit;
    for(/* nothing to see here */;pit!=contour.end();++pit)
    {
        cShape.lineTo(pit->x, pit->y);
        cShape.moveTo(pit->x, pit->y);
    }
    cShape.close();
    // triangulate the shape and keep the resulting TriMesh2d
    Triangulator tris(cShape);
    mMeshes.push_back(tris.calcMesh());
}
```


Right, so at this point it should be a simple exercise in gl::draw()ing the contents of mMeshes, yeah? Sadly, this method yields no trimesh for you! And as I mentioned above, a quick call to getNumVertices() revealed that there were, in fact, no vertices for you! either. The docs on Triangulator led me to believe that you can just call the constructor with a Shape2d and be good to go, and a quick test showed that constructing a Triangulator from other objects does in fact yield all the verts you could ever want. So methinks either it's an issue with the Shape2d implementation, or I'm building my Shape2d wrong. I ruled out the latter (well, not decisively), since Triangulator has the concept of invalid inputs: e.g., if you don't close() your Shape2d, the constructor throws. So...what to do, what to do? To the SampleCave!
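Looking back, one plausible culprit (assuming I'm reading Cinder's source right) is the extra moveTo() inside the loop: Shape2d::moveTo() appears to start a brand-new contour every time it's called, so calling it after every lineTo() chops the outline into a pile of one-segment sub-paths that enclose no area, leaving the triangulator nothing to fill. Here's a standalone toy model of that behavior with no Cinder involved. ToyShape, ToyPath, build(), signedArea(), and totalArea() are hypothetical names, and the structs only mimic the moveTo()/lineTo() semantics described here; they are not Cinder's code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins that mimic (not reproduce) Shape2d's semantics:
// moveTo() opens a new contour, lineTo() extends the current one.
struct Pt { float x, y; };
using ToyPath = std::vector<Pt>;

struct ToyShape {
    std::vector<ToyPath> contours;
    void moveTo(Pt p) { contours.push_back(ToyPath{p}); }
    void lineTo(Pt p) {
        assert(!contours.empty() && "moveTo() must come first");
        contours.back().push_back(p);
    }
};

// Shoelace formula: signed area of a closed polygon (0 for fewer than 3 points).
float signedArea(const ToyPath& path) {
    if (path.size() < 3) return 0.0f;
    float twiceArea = 0.0f;
    for (std::size_t i = 0; i < path.size(); ++i) {
        const Pt& a = path[i];
        const Pt& b = path[(i + 1) % path.size()];
        twiceArea += a.x * b.y - b.x * a.y;
    }
    return 0.5f * twiceArea;
}

// Sum of the areas enclosed by every contour in the shape.
float totalArea(const ToyShape& s) {
    float sum = 0.0f;
    for (const ToyPath& c : s.contours) sum += signedArea(c);
    return sum;
}

// Build a shape from contour points, optionally repeating the extra moveTo().
ToyShape build(const std::vector<Pt>& pts, bool moveAfterEachLine) {
    ToyShape s;
    if (pts.empty()) return s;
    s.moveTo(pts[0]);
    for (std::size_t i = 1; i < pts.size(); ++i) {
        s.lineTo(pts[i]);
        if (moveAfterEachLine) s.moveTo(pts[i]);  // opens yet another contour
    }
    return s;
}
```

With the square {0,0}, {10,0}, {10,10}, {0,10}, build(square, true) ends up with four contours and a total area of 0, while build(square, false) gives a single contour enclosing an area of 100. In the real code, the analogous change would be dropping the cShape.moveTo() inside the loop; treat that as an untested suggestion.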

TRIANGULATE AGAIN, ONE YEAR! NEXT!

Mike Bostock, he of d3.js fame, gave a great talk at the Eyeo Festival last year on the importance of good examples (watch it on Vimeo), and you know, it's so true. Sample code is sorta like documentation: we employ technical writers for that sorta thing, so I feel like we should at least give some folks a solid contract to put together good sample code for whatever we're foisting onto the world, rather than relegating samples to free time and interns (no offense to either free time or interns). Now, Cinder has amazing sample code, and a quick Google search for TriMesh2d popped up the PolygonBoolean sample, which was doing basically what I wanted, i.e., constructing and drawing a TriMesh2d from a Shape2d...kinda. I trust the good folks at Team Cinder not to ship sample code that doesn't work, so one quick build 'n' run later I had a solution. I was sooooo close...

```cpp
// cv contours are in mContours, mMeshes is vector<TriMesh2d>
for(auto vit=mContours.begin();vit!=mContours.end();++vit)
{
    // copy the contour points into a PolyLine2f outline
    PolyLine2f cShape;
    const vector<cv::Point>& contour = *vit;
    for(auto pit=contour.begin();pit!=contour.end();++pit)
    {
        cShape.push_back(fromOcv(*pit));
    }
    // Triangulator accepts the PolyLine2f directly
    Triangulator tris(cShape);
    mMeshes.push_back(tris.calcMesh());
}
```

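Triangulator does the heavy lifting here (as far as I know, Cinder builds it on the libtess2 tessellator). If you want a feel for what "contour in, triangles out" means, here's a much simpler, swapped-in technique: ear clipping on a single simple polygon (no holes, no self-intersections). It's a standalone sketch, not Cinder's implementation, and Vec2, Tri, earClip(), and the helpers are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

struct Vec2 { double x, y; };
using Tri = std::array<std::size_t, 3>;  // indices into the input polygon

// Twice the signed area of triangle abc; positive when a->b->c turns counter-clockwise.
double cross(const Vec2& a, const Vec2& b, const Vec2& c) {
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

// Shoelace formula; positive for counter-clockwise vertex order.
double polygonArea(const std::vector<Vec2>& poly) {
    double twice = 0.0;
    for (std::size_t i = 0; i < poly.size(); ++i) {
        const Vec2& a = poly[i];
        const Vec2& b = poly[(i + 1) % poly.size()];
        twice += a.x * b.y - b.x * a.y;
    }
    return 0.5 * twice;
}

// True if p is inside or on the edge of counter-clockwise triangle abc.
bool inTriangle(const Vec2& p, const Vec2& a, const Vec2& b, const Vec2& c) {
    return cross(a, b, p) >= 0 && cross(b, c, p) >= 0 && cross(c, a, p) >= 0;
}

// Ear clipping for one simple polygon. Produces n - 2 triangles for valid
// input; on degenerate input it may give up early and return fewer.
std::vector<Tri> earClip(const std::vector<Vec2>& poly) {
    std::vector<Tri> tris;
    const std::size_t n = poly.size();
    if (n < 3) return tris;

    // Remaining vertices, kept in counter-clockwise order.
    std::vector<std::size_t> idx(n);
    for (std::size_t i = 0; i < n; ++i) idx[i] = i;
    if (polygonArea(poly) < 0) std::reverse(idx.begin(), idx.end());

    std::size_t i = 0;
    for (std::size_t steps = 0; idx.size() > 3 && steps < 2 * n * n; ++steps) {
        const std::size_t m = idx.size();
        const std::size_t prev = idx[(i + m - 1) % m], cur = idx[i], next = idx[(i + 1) % m];
        const Vec2& a = poly[prev];
        const Vec2& b = poly[cur];
        const Vec2& c = poly[next];
        // An ear is a convex corner whose triangle holds no other remaining vertex.
        bool isEar = cross(a, b, c) > 0;
        for (std::size_t k = 0; isEar && k < m; ++k) {
            const std::size_t v = idx[k];
            if (v != prev && v != cur && v != next && inTriangle(poly[v], a, b, c)) isEar = false;
        }
        if (isEar) {
            tris.push_back({prev, cur, next});
            idx.erase(idx.begin() + static_cast<std::ptrdiff_t>(i));  // clip the ear
            if (i == idx.size()) i = 0;
        } else {
            i = (i + 1) % m;
        }
    }
    if (idx.size() == 3) tris.push_back({idx[0], idx[1], idx[2]});
    return tris;
}
```

For the chevron {0,0}, {10,0}, {10,10}, {5,5}, {0,10}, earClip() returns three triangles whose areas add up to the polygon's 75 square units. Unlike a full tessellator, it doesn't handle holes or multiple contours, which is a good reason to let Triangulator deal with real contour data.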

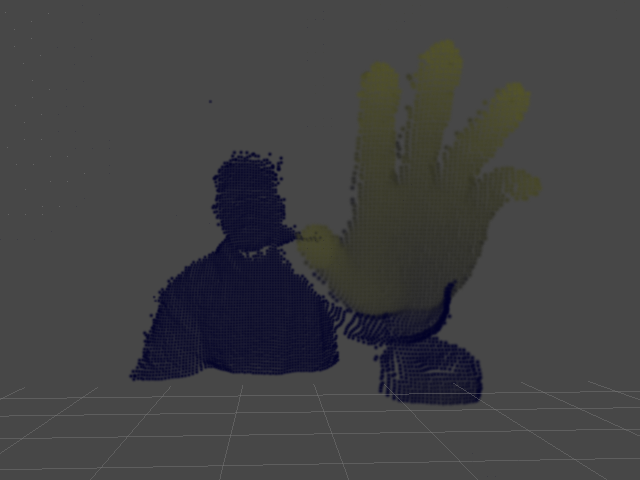

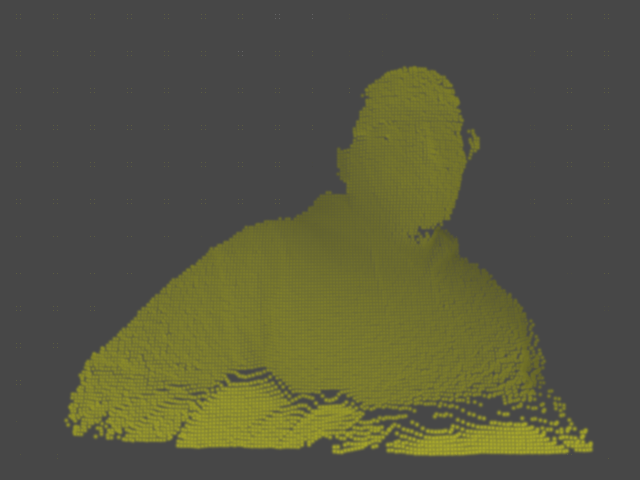

The results? Well, see for yourself:

My tribute to Harold Ramis, may you never end up in one of your own traps, sir.

Next steps are to maybe run some reduction/smoothing on the contours (although I suppose it doesn't matter terribly for this prototype) and get it all into Box2D, which I'll cover in Stage 2, including a quick 'n' dirty Cinder-based Box2D debug draw class. This is awesome; it's total Tron stuff, bringing the real into the digital and all that sort of sorcery. Once I get the Box2D stuff implemented, I'll stick a project up on GitHub. Until then, if you have specific questions, Tag The Inbox or leave a comment below; you are always welcome to try my Shogun Style. For reference, here's the complete update() and draw():

```cpp
// Using Cinder-OpenCV and Intel Perceptual Computing SDK 2013
void segcvtestApp::update()
{
    mContours.clear();
    mMeshes.clear();

    if(mPXC.AcquireFrame(true))
    {
        PXCImage *rgbImg = mPXC.QueryImage(PXCImage::IMAGE_TYPE_COLOR);
        PXCImage *segImg = mPXC.QuerySegmentationImage();
        PXCImage::ImageData rgbData, segData;

        // 640x480 BGR color frame -> background texture
        if(rgbImg->AcquireAccess(PXCImage::ACCESS_READ, &rgbData)>=PXC_STATUS_NO_ERROR)
        {
            mRGB=gl::Texture(rgbData.planes[0],GL_BGR,640,480);
            rgbImg->ReleaseAccess(&rgbData);
        }

        // 320x240 single-channel segmentation mask -> texture
        if(segImg->AcquireAccess(PXCImage::ACCESS_READ, &segData)>=PXC_STATUS_NO_ERROR)
        {
            mSeg=gl::Texture(segData.planes[0],GL_LUMINANCE,320,240);
            segImg->ReleaseAccess(&segData);
        }

        // resize the mask into mDstSurf (presumably allocated at the color frame's size)
        mSrcSurf = Surface(mSeg);
        ip::resize(mSrcSurf, &mDstSurf);
        mPXC.ReleaseFrame();
    }

    // findContours may modify its input in OpenCV 2.x, so hand it a copy
    cv::Mat surfMat = toOcv(mDstSurf.getChannelRed()).clone();
    cv::findContours(surfMat, mContours, CV_RETR_LIST, CV_CHAIN_APPROX_SIMPLE);

    // one triangulated mesh per contour
    for(auto vit=mContours.begin();vit!=mContours.end();++vit)
    {
        PolyLine2f cLine;
        const vector<cv::Point>& contour = *vit;
        for(auto pit=contour.begin();pit!=contour.end();++pit)
        {
            cLine.push_back(fromOcv(*pit));
        }
        Triangulator tris(cLine);
        mMeshes.push_back(tris.calcMesh());
    }
}

void segcvtestApp::draw()
{
    // draw camera feed (skipped until the first color frame arrives)
    gl::clear(Color( 0, 0, 0 ) );
    gl::color(Color::white());
    if(mRGB) gl::draw(mRGB, Vec2f::zero());

    // draw meshes as green wireframes over the feed
    gl::enableWireframe();
    gl::color(Color(0,1,0));
    for(auto mit=mMeshes.begin();mit!=mMeshes.end();++mit)
    {
        gl::draw(*mit);
    }
    gl::disableWireframe();
}
```

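On the contour reduction/smoothing mentioned above: OpenCV already ships Douglas-Peucker simplification as cv::approxPolyDP, which would be the natural thing to call on each contour before building the PolyLine2f. To show what it's doing, here's a standalone, hypothetical sketch of the same Ramer-Douglas-Peucker idea on an open polyline, with epsilon in pixels. P, lineDistance(), rdp(), and simplify() are names I made up; this is not OpenCV's code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct P { double x, y; };

// Perpendicular distance from p to the line through a and b
// (falls back to plain point distance when a == b).
double lineDistance(const P& p, const P& a, const P& b) {
    const double dx = b.x - a.x, dy = b.y - a.y;
    const double len = std::hypot(dx, dy);
    if (len == 0.0) return std::hypot(p.x - a.x, p.y - a.y);
    return std::fabs(dy * (p.x - a.x) - dx * (p.y - a.y)) / len;
}

// Keep the point farthest from the chord [first, last] if it is more than
// epsilon away and recurse on both halves; otherwise drop everything between.
void rdp(const std::vector<P>& pts, std::size_t first, std::size_t last,
         double epsilon, std::vector<bool>& keep) {
    if (last <= first + 1) return;
    double maxDist = -1.0;
    std::size_t index = first;
    for (std::size_t i = first + 1; i < last; ++i) {
        const double d = lineDistance(pts[i], pts[first], pts[last]);
        if (d > maxDist) { maxDist = d; index = i; }
    }
    if (maxDist > epsilon) {
        keep[index] = true;
        rdp(pts, first, index, epsilon, keep);
        rdp(pts, index, last, epsilon, keep);
    }
}

// Ramer-Douglas-Peucker simplification of an open polyline.
std::vector<P> simplify(const std::vector<P>& pts, double epsilon) {
    assert(epsilon >= 0.0);
    if (pts.size() < 3) return pts;
    std::vector<bool> keep(pts.size(), false);
    keep.front() = true;
    keep.back() = true;
    rdp(pts, 0, pts.size() - 1, epsilon, keep);
    std::vector<P> out;
    for (std::size_t i = 0; i < pts.size(); ++i)
        if (keep[i]) out.push_back(pts[i]);
    return out;
}
```

With epsilon = 0.5, the L-shaped run {0,0}, {5,0}, {10,0}, {10,5}, {10,10} collapses to its three corners. Since findContours returns closed contours, cv::approxPolyDP with its closed flag set to true is probably the better fit inside update(); this sketch only treats the open case.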